Aligning alignment

Update: Preliminary results were presented at CHI 2025 as a Late-Breaking Work.

Intro

This project is part of my PhD studies.

Concept alignment (having a shared understanding of concepts) is essential for both human-human and human-agent communication. While large language models (LLMs) promise human-like dialogue capabilities for conversational agents, few studies have examined people's perceptions and expectations of concept alignment, which hinders the design of effective LLM agents.

In this project, I designed a custom concept alignment task and collected dialogues from both human-human and human-LLM pairs performing it. This made it possible to look for differences in dialogue behaviors between the two settings. The results highlighted the co-adaptive and collaborative nature of concept alignment.

Behind the scenes

The project itself consisted of multiple stages. I'll note down some considerations that may not make it into a paper, but may still be interesting.

Curating a grounded concept alignment corpus

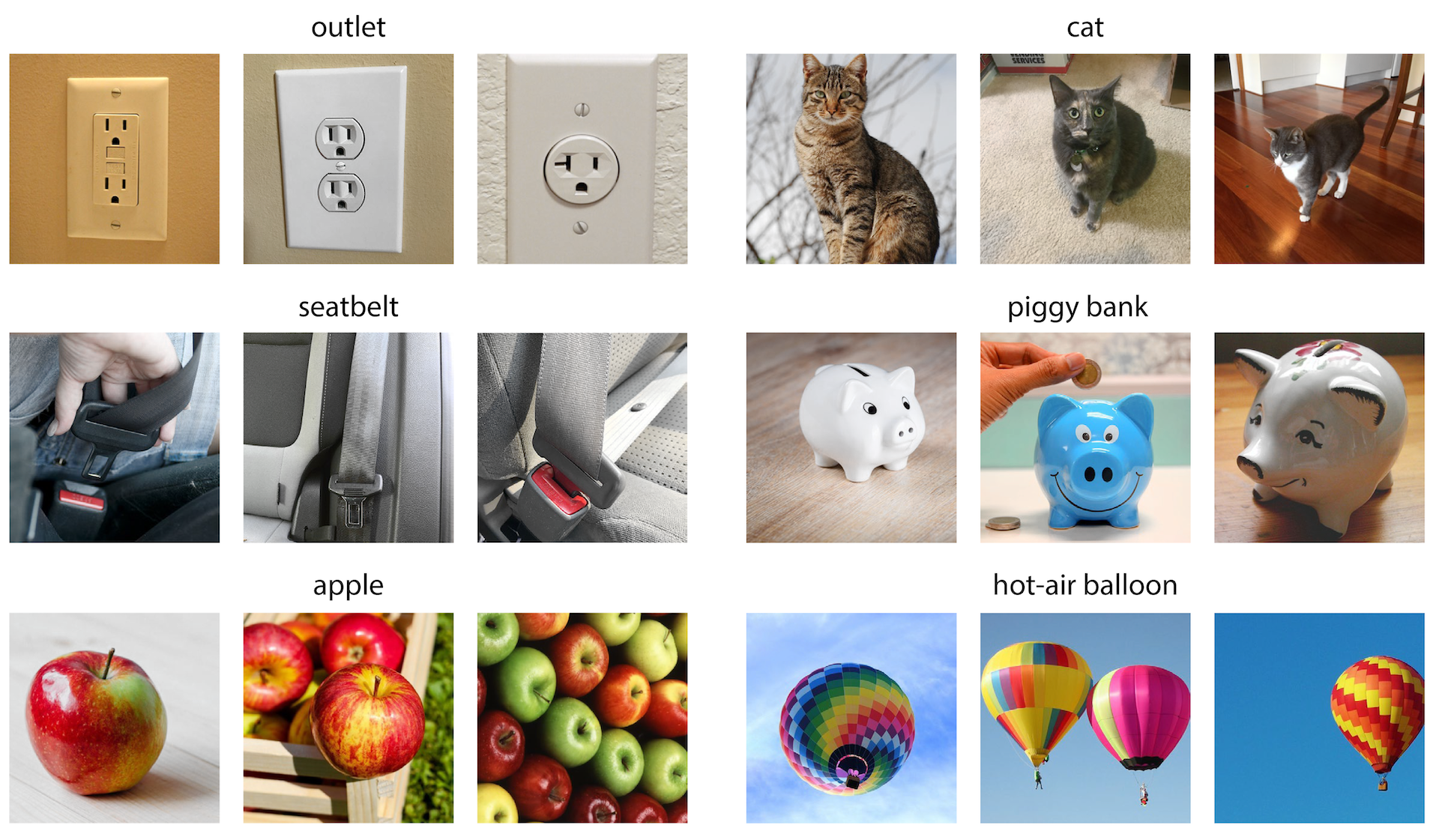

The task adapts the THINGS dataset[1] for concept-object pairs (objects represented using pictures). The core idea was to have participants see how their grouping/sorting of images, based on their conceptual understanding, differed. They then engaged in a discussion to try to get on the same page.
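To make the "see how their grouping differed" step concrete, here is a minimal sketch of how two participants' sortings could be compared. It is an illustration, not the project's actual implementation: the function names, the image file names, and the group labels are all hypothetical, and it assumes each participant's sorting is stored as a mapping from image to group label.

```python
def grouping_differences(sort_a, sort_b):
    """Return the images that the two participants placed in different groups.

    Both arguments map an image name to the group label a participant chose.
    Only images sorted by both participants are compared.
    """
    shared = sort_a.keys() & sort_b.keys()
    return {
        img: (sort_a[img], sort_b[img])
        for img in sorted(shared)
        if sort_a[img] != sort_b[img]
    }


def agreement_rate(sort_a, sort_b):
    """Fraction of shared images that both participants put in the same group."""
    shared = sort_a.keys() & sort_b.keys()
    if not shared:
        return 0.0
    same = sum(sort_a[img] == sort_b[img] for img in shared)
    return same / len(shared)


# Hypothetical example data (not taken from THINGS or from the study).
participant_a = {
    "mug.jpg": "container",
    "vase.jpg": "container",
    "bowl.jpg": "container",
    "spoon.jpg": "tool",
}
participant_b = {
    "mug.jpg": "container",
    "vase.jpg": "decoration",
    "bowl.jpg": "container",
    "spoon.jpg": "tool",
}

differences = grouping_differences(participant_a, participant_b)
rate = agreement_rate(participant_a, participant_b)
```

In a setup like this, the images in `differences` would be natural candidates to put in front of the pair as discussion topics.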

This task had the benefit of visually grounding the dialogue in the objects, so that the discussions were not purely hypothetical. I also made this choice because I see concept alignment as especially useful for human-robot/embodied agent interaction.

In hindsight, this might have left out a significant portion of probable dialogues that revolve around intellectual as well as emotional/experiential content. This is something to address in the future.

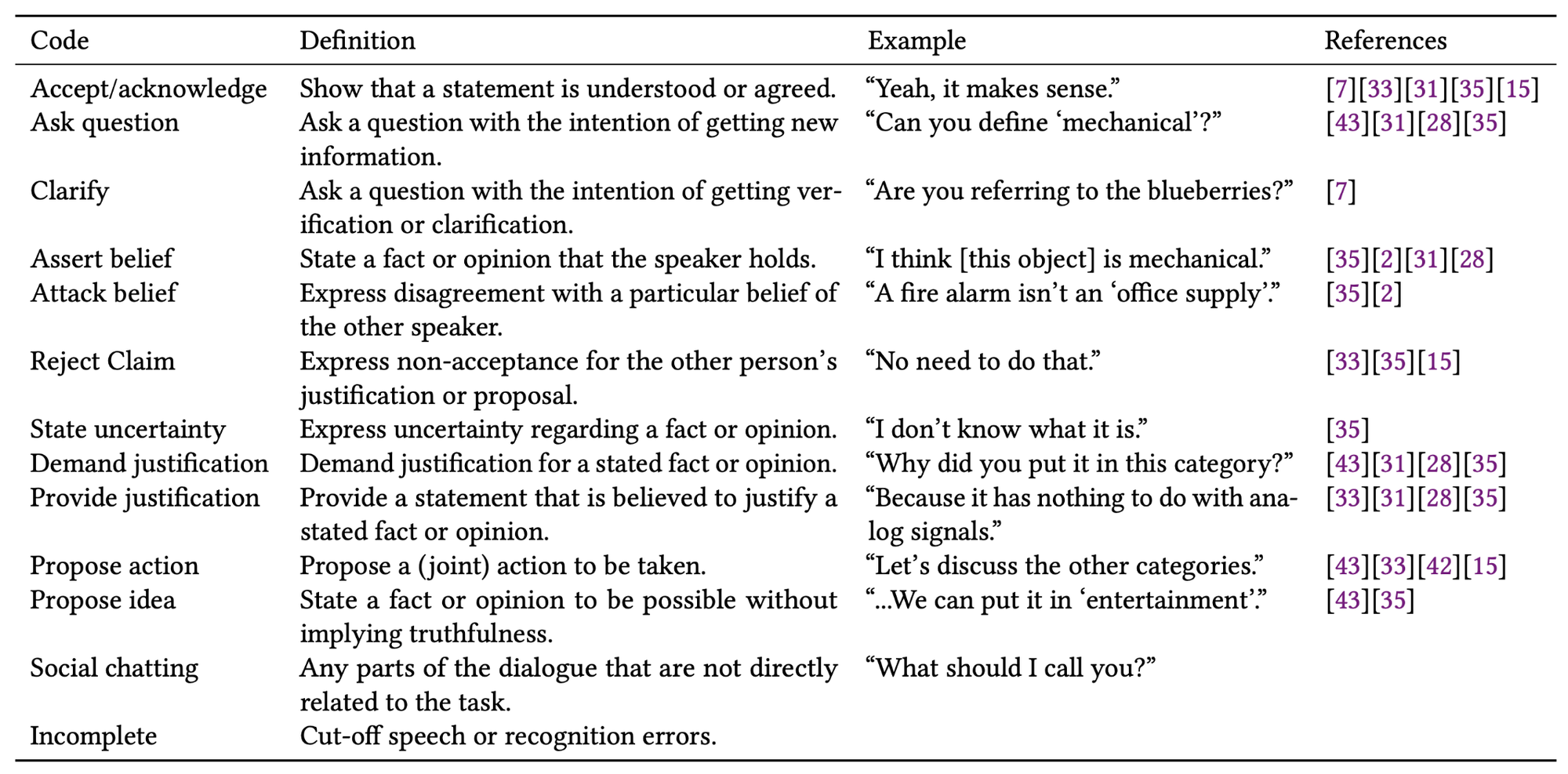

A short review of dialogue acts for concept alignment

To know which behaviors are related to building alignment, I first had to conduct a short literature review on dialogue acts for grounding, meaning negotiation, argumentation, and other relevant dialogue processes. The review may be too short to stand on its own, but so far it has been enough to serve as part of this project.

Speech vs. text

I debated whether to have participants interact through a speech or a text interface, as the medium has been shown to significantly affect how people communicate. In the end, I decided to collect speech data for two reasons: audio language models (and multimodal ones) are becoming more common, and audio can be turned into text but not vice versa.

Implementing speech interaction was not easy, however, and latency was a big issue. Many participants complained about the wait time between utterances. Originally I wanted both the agent and the participants to speak freely, but that proved to be a problem for my voice activity detection (VAD) and automatic speech recognition (ASR) system, especially when there were issues with echo cancellation. Funnily enough, the Alloy voice on the OpenAI platform had a slight accent when speaking Chinese, yet many participants liked it. They often said that it gave the agent personality.

Next steps

The next steps are to continue collecting data in order to obtain more definitive results, and to compare the dialogues along dimensions other than dialogue act distribution.
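For readers wondering what a comparison of dialogue act distributions can look like, below is a minimal sketch. It is not the analysis used in this project: the act labels are hypothetical, and Jensen-Shannon divergence is just one reasonable choice for measuring the distance between two distributions.

```python
from collections import Counter
import math


def act_distribution(acts):
    """Turn a list of dialogue act labels into relative frequencies."""
    counts = Counter(acts)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {act: n / total for act, n in counts.items()}


def js_divergence(p, q):
    """Jensen-Shannon divergence (base 2) between two distributions.

    Returns 0 for identical distributions and 1 for distributions
    that share no labels.
    """
    labels = set(p) | set(q)
    # Mixture distribution: the average of p and q for every label.
    m = {a: 0.5 * (p.get(a, 0.0) + q.get(a, 0.0)) for a in labels}

    def kl_to_mixture(x):
        return sum(x[a] * math.log2(x[a] / m[a]) for a in x if x[a] > 0)

    return 0.5 * kl_to_mixture(p) + 0.5 * kl_to_mixture(q)


# Hypothetical act labels for illustration only.
human_human = ["propose", "clarify", "agree", "clarify", "disagree", "agree"]
human_llm = ["propose", "agree", "agree", "agree", "clarify", "agree"]

p = act_distribution(human_human)
q = act_distribution(human_llm)
distance = js_divergence(p, q)
```

A single number like `distance` hides which acts drive the difference, so in practice it would sit alongside a per-act comparison of `p` and `q`.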